Posted by FatDBA on July 25, 2023

Hey Guys,

Free FatDBA stickers are here. Send me your address at fatdba@fatdba.com and I will mail them to you free of charge. I would love to see them shining on your laptop … 🙂

Thanks

The ‘FatDBA’


Posted by FatDBA on June 25, 2023

Recently, while applying a WebLogic PSU patch in an Oracle Hyperion-Essbase 14.x environment, we hit a weird issue: the patching utility was exceptionally slow during a rollback. We were using the BSU (BEA Smart Update) utility to patch the middleware homes, and it took almost 95 minutes to roll back a patch in one of the lower environments. The slowness was most noticeable while it was checking for conflicts in the system. That was surprising. BSU is an old utility (pre-OPatch), but 95 minutes to roll back a single patch was still far too long and not acceptable.

I even increased the memory for BSU and tried a few more things, but none of them worked and it stayed dead slow. Luckily, I found an old Metalink note (ID 2271366.1) about the same issue, where WLS BSU (Smart Update) may take a very long time to apply patches. This is especially true with larger patches such as the Patch Set Update (PSU).

As per the recommendation, we applied the two Smart Update utility patches listed below, and the next time it took hardly 5 minutes to roll back the same patch in a different environment. Both patches update some of the critical BSU program modules (patch client, patch common and common dev modules) and fix the issues in their code by bringing Smart Update to V4.

Patch 12426828 (SMARTUPDATE 3.3 INSTALLER PLACEHOLDER)

Patch 31136426 (SMART UPDATE TOOL ENHANCEMENT V4)

Hope It Helped!

Prashant Dixit


Posted by FatDBA on June 24, 2023

I am excited to share that I will be speaking this year at the Oracle Enterprise Manager Technology Forum 2023 😀 🤘

I will present on how to leverage Database Real Application Testing features to speed up adoption of new technologies or during database and infrastructure upgrades, migration, consolidation, and configuration changes without worrying about the performance and stability of your production workload.

It's going to be interesting. Register for all 3 days!

https://go.oracle.com/LP=136586?elqCampaignId=448599

Hope It Helped!

Prashant Dixit


Posted by FatDBA on May 6, 2023

Hi All,

Oracle has added tons of new features in Oracle 23c across many different areas, and Oracle Sharding has also received a good addition in the new version. One of the latest sharding-related optimizer parameters added in 23c is OPTIMIZER_CROSS_SHARD_RESILIENCY, which enables resilient execution of cross-shard SQL statements.

SQL> select name, value, DESCRIPTION from v$parameter where name like 'optimizer_cross_shard_resiliency';

NAME VALUE DESCRIPTION

------------------------------------ ------------ ----------------------------------------------------------------

optimizer_cross_shard_resiliency FALSE enables resilient execution of cross shard queries

As for cross-shard statements: a cross-shard query is one that must scan data from more than one shard, and the processing on each shard is independent of any other shard. A multi-shard query maps to more than one shard, and the coordinator might need to do some processing before sending the result to the client. Horizontally scalable cross-shard query coordinators can improve the performance and availability of read-intensive cross-shard queries.

Coming back to the parameter: when it is set to TRUE and a cross-shard query fails on one or more shards, the query execution continues on the Oracle Data Guard standbys of the failed shards. This should be well tested before you implement it in a production environment, as there might be some performance overhead associated with cross-shard resiliency.
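As a rough sketch of how one might try this out in a test environment, the statements below first check whether the parameter can be changed at session or system level and then switch it on for the current session only. It assumes the parameter is session-modifiable (the ISSES_MODIFIABLE column will confirm or refute that) and that you are connected to the database acting as the cross-shard query coordinator; neither assumption is confirmed by the output above.

```sql
-- Check the current value and how the parameter can be modified.
SELECT name, value, isses_modifiable, issys_modifiable
FROM   v$parameter
WHERE  name = 'optimizer_cross_shard_resiliency';

-- Assumption: ISSES_MODIFIABLE is TRUE. Enable it for this session only,
-- so other sessions keep the default (FALSE) while you test.
ALTER SESSION SET optimizer_cross_shard_resiliency = TRUE;

-- ... run the cross-shard query you want to test here ...

-- Switch back to the default afterwards.
ALTER SESSION SET optimizer_cross_shard_resiliency = FALSE;
```

Testing at session level first keeps any performance overhead confined to the session under test.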

For example, in the sharding configuration below we have two primary shards, and each of them has a READ ONLY standby available in a different region and shardgroup.

GDSCTL>config shard

Name Shard Group Status State Region Availability

----- -------------------- ------- --------- -------- ---------------

sh1 primary_canada_shg Ok Deployed canada ONLINE

sh2 primary_canada_shg Ok Deployed canada ONLINE

sh3 standby_india_shg Ok Deployed india READ ONLY

sh4 standby_india_shg Ok Deployed india READ ONLY

GDSCTL>

When running a multi-shard query, the query coordinator assists queries that need data from more than one shard, and if any of the primary shards fails to respond, the coordinator will turn to its Data Guard standby to serve the request.

Hope It Helped!

Prashant Dixit

Tagged: oracle

Posted by FatDBA on April 14, 2023

Hi Guys,

Oracle 23c is full of great features. One of the outstanding features added in this version is Automatic Transaction Rollback, which means no more long transaction-level locking, no more of the infamous event 'enq: TX – row lock contention', and no more pessimistic locking 🙂

With row-level or pessimistic locking, a single row of a table is locked by one of the following statements: INSERT, UPDATE, DELETE, MERGE, and SELECT … FOR UPDATE. The row-level lock held by the first session exists until that session performs a rollback or a commit. This situation becomes severe in some cases, e.g. when the application modifies some rows but doesn't commit or terminate the transaction because of an exception in the application. Traditionally, in such cases the database administrator has to manually terminate the blocking transaction by killing the parent session.

Oracle 23c has come up with a brilliant feature that it implements through a session setting controlling transaction priority. Transaction priority (TXN_PRIORITY) is set at session level using the ALTER SESSION command. Once set, it remains the same for all transactions created in that session. The parameter specifies a priority (HIGH, MEDIUM, or LOW) for all transactions in a user session. How long a higher-priority transaction waits before the lower-priority blocker is rolled back is controlled by the following parameters:

TXN_AUTO_ROLLBACK_HIGH_PRIORITY_WAIT_TARGET specifies the maximum number of seconds that a HIGH priority transaction will wait for a row lock. Similarly, TXN_AUTO_ROLLBACK_MEDIUM_PRIORITY_WAIT_TARGET specifies the maximum number of seconds that a MEDIUM priority transaction will wait for a row lock.
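To make these settings concrete, here is a minimal sketch using the parameter names from the 23c Free Developer Release covered in this post. The 15-second value is the one used in the demo that follows; the 30-second MEDIUM value is only an illustrative assumption. TXN_PRIORITY is written unquoted, in the same form the demo uses.

```sql
-- System level: how long a HIGH priority transaction waits for a row lock.
ALTER SYSTEM SET txn_auto_rollback_high_priority_wait_target = 15;

-- System level: the same for MEDIUM priority transactions.
-- The value 30 is an assumption chosen purely for illustration.
ALTER SYSTEM SET txn_auto_rollback_medium_priority_wait_target = 30;

-- Session level: every transaction started in this session is now LOW priority.
ALTER SESSION SET txn_priority = LOW;

-- Confirm what the current session and instance are using.
SELECT name, value
FROM   v$parameter
WHERE  name IN ('txn_priority',
                'txn_auto_rollback_high_priority_wait_target',
                'txn_auto_rollback_medium_priority_wait_target');
```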

Let's do a quick demo to explain this behavior in detail.

I have created a small table with two rows and two columns and will use it to test the automatic transaction rollback feature. To keep the demo quick, I will set txn_auto_rollback_high_priority_wait_target to a low value of 15 seconds. I will issue an UPDATE statement from the first session after setting TXN_PRIORITY to 'LOW' at the session level, then open a parallel session (session 2) and issue an UPDATE there that tries to modify the same row, which is already locked in exclusive mode by session 1.

--------------------------------------

-- SESSION 1

--------------------------------------

[oracle@mississauga ~]$ sqlplus / as sysdba

SQL*Plus: Release 23.0.0.0.0 - Developer-Release on Fri Apr 14 22:46:34 2023

Version 23.2.0.0.0

Copyright (c) 1982, 2023, Oracle. All rights reserved.

Connected to:

Oracle Database 23c Free, Release 23.0.0.0.0 - Developer-Release

Version 23.2.0.0.0

SQL>

SQL> select * from dixit;

ID NAME

---------- --------------------

999 Fatdba

101 Prashant

SQL> select to_number(substr(dbms_session.unique_session_id,1,4),'XXXX') mysid from dual;

MYSID

----------

59

SQL> show parameter TXN_AUTO_ROLLBACK_HIGH_PRIORITY_WAIT_TARGET

NAME TYPE VALUE

-------------------------------------------- ----------- ------------------------------

txn_auto_rollback_high_priority_wait_target integer 15

SQL> alter session set TXN_PRIORITY=LOW;

Session altered.

SQL> show parameter TXN_PRIORITY

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

txn_priority string LOW

-- I will now issue update and don't issue ROLLBACK or COMMIT

SQL> update dixit set id=101010101 where name='Fatdba';

1 row updated.

SQL>

Okay, so the stage is set! We've already run an UPDATE statement on the table from session 1 (SID 59). I will now open a new session (session 2) and issue an UPDATE on the same row, but here txn_priority is left at its default of 'HIGH', and we've already set txn_auto_rollback_high_priority_wait_target to 15 seconds.

--------------------------------------

-- SESSION 2

--------------------------------------

[oracle@mississauga ~]$ sqlplus / as sysdba

SQL*Plus: Release 23.0.0.0.0 - Developer-Release on Fri Apr 14 22:46:34 2023

Version 23.2.0.0.0

Copyright (c) 1982, 2023, Oracle. All rights reserved.

Connected to:

Oracle Database 23c Free, Release 23.0.0.0.0 - Developer-Release

Version 23.2.0.0.0

SQL>

SQL> select to_number(substr(dbms_session.unique_session_id,1,4),'XXXX') mysid from dual;

MYSID

----------

305

SQL> show parameter TXN_PRIORITY;

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

txn_priority string HIGH

-- Now this session will go into blocking state.

SQL> update dixit set id=0 where name='Fatdba';

...

.....

Alright, so session 2 (SID 305) with txn_priority=HIGH is now blocked, as the row was first locked in exclusive mode by session 1 (SID 59). But we've set TXN_PRIORITY=LOW in session 1 (at session level) and changed TXN_AUTO_ROLLBACK_HIGH_PRIORITY_WAIT_TARGET to 15 seconds at system level.

Let's query the database and see what is waiting on what. You will see that SID 305 (session 2) is waiting for the transaction-level lock on the event 'enq: TX - row lock (HIGH priority)'. By the way, this is also a new event added in Oracle 23c for sessions waiting with HIGH priority; the other two are for LOW and MEDIUM priorities.

SQL>

SQL> select event#, name, WAIT_CLASS from v$event_name where name like '%TX - row%';

EVENT# NAME WAIT_CLASS

---------- ---------------------------------------------------------------- ----------------------------------------------------------------

340 enq: TX - row lock contention Application

341 enq: TX - row lock (HIGH priority) Application

342 enq: TX - row lock (MEDIUM priority) Application

343 enq: TX - row lock (LOW priority) Application

SQL>

-----------------------------------------------------------------

-- Contention details (What has blocked what ?)

-----------------------------------------------------------------

SQL>

INST_ID           : 1
SID               : 305
SERIAL#           : 15926
USERNAME          : SYS
SQL_ID            : 9jwbjqg195zdw
PLAN_HASH_VALUE   : 2635034114
DISK_READS        : 0
BUFFER_GETS       : 6
ROWS_PROCESSED    : 0
EVENT             : enq: TX - row lock (HIGH priority)
OSUSER            : oracle
STATUS            : ACTIVE
BLOCKING_SE       : VALID
BLOCKING_INSTANCE : 1
BLOCKING_SESSION  : 59
PROCESS           : 8808
MACHINE           : mississauga.candomain
PROGRAM           : sqlplus@mississauga.candomain (TNS V1-V3)
MODULE            : sqlplus@mississauga.candomain (TNS V1-V3)
ACTION            :
LOGONTIME         : 04-14-2023 22:46:35
LAST_CALL_ET      : 12
SECONDS_IN_WAIT   : 13
STATE             : WAITING
SQL_TEXT          : update dixit set id=00000000 where name='Fatdba'
RUNNING_SIN       : 00:00:12
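The query that produced the output above is not shown in the post. A simplified sketch that returns similar information, assuming only the standard GV$SESSION and GV$SQL views (and fewer columns than the original script), could look like this:

```sql
-- Sketch only: sessions that are currently blocked, their blocker,
-- the event they wait on, and the SQL text they are running.
SELECT s.inst_id,
       s.sid,
       s.serial#,
       s.username,
       s.sql_id,
       s.event,
       s.blocking_session_status,
       s.blocking_instance,
       s.blocking_session,
       s.seconds_in_wait,
       s.state,
       q.sql_text
FROM   gv$session s
       LEFT JOIN gv$sql q
              ON  q.inst_id      = s.inst_id
              AND q.sql_id       = s.sql_id
              AND q.child_number = s.sql_child_number
WHERE  s.blocking_session IS NOT NULL
ORDER  BY s.seconds_in_wait DESC;
```

In the demo this should list SID 305 with BLOCKING_SESSION 59 for as long as session 2 is waiting.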

Session 2 (SID 305) waits for 15 seconds, after which the database automatically snipes session 1 (SID 59) because of its LOW priority. The UPDATE issued by session 2 then goes through, whereas session 1 (SID 59) throws "ORA-03135: connection lost contact".

-- SESSION 1 with SID 59

SQL>

SQL> select * from dixit;

select * from dixit

*

ERROR at line 1:

ORA-03135: connection lost contact

Process ID: 8843

Session ID: 59 Serial number: 31129

-- SESSION 2 with SID 305

SQL> update dixit set id=0 where name='Fatdba';

1 row updated.

SQL> select * from dixit;

ID NAME

---------- --------------------

0 Fatdba

101 Prashant

SQL>

Hope It Helped!

Prashant Dixit

Tagged: newfeatures, oracle, performance, Tuning

Posted by FatDBA on April 8, 2023

Hi All,

Oracle on April 3, 2023 announced a free version of Oracle Database 23c (Release 23.0.0.0.0 – Developer-Release). Oracle Database 23c Free—Developer Release is available for download as a Docker Image, VirtualBox VM, or Linux RPM installation file, without requiring a user account or login.

Oracle 23c has a long list of new features, for example the Boolean data type, SELECT without FROM DUAL (just select the expression), lock-free DML, joins in UPDATE and DELETE statements, and 4096 columns in a table. Development-related additions include DROP TABLE IF EXISTS, CREATE TABLE IF NOT EXISTS, JavaScript in the database (MLE), multiple rows in a single INSERT command, and storing data both as JSON and relationally.
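A few of these are easy to try once the database is up. The snippet below is a small sketch for a 23c session; the table demo_flags and its columns are hypothetical names made up for illustration.

```sql
-- SELECT without FROM DUAL: just select the expression.
SELECT sysdate;

-- CREATE TABLE IF NOT EXISTS, with a BOOLEAN column.
CREATE TABLE IF NOT EXISTS demo_flags (
  id        NUMBER,
  is_active BOOLEAN
);

-- Multiple rows in a single INSERT, using Boolean literals.
INSERT INTO demo_flags (id, is_active)
VALUES (1, TRUE),
       (2, FALSE);

-- Filter on the Boolean column.
SELECT id FROM demo_flags WHERE is_active = TRUE;

-- DROP TABLE IF EXISTS: no error if the table is already gone.
DROP TABLE IF EXISTS demo_flags;
```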

This long weekend gave me an opportunity to test the new Oracle 23c Developer Release. This post explains the easy installation of the database on Oracle Linux 8 using RPMs. You have to download the Oracle 23c preinstall RPM and the core/main RPM file. Get them from the download link https://www.oracle.com/database/technologies/free-downloads.html

[root@mississauga files]#

[root@mississauga files]# ls

oracle-database-free-23c-1.0-1.el8.x86_64.rpm oracle-database-preinstall-23c-1.0-0.5.el8.x86_64.rpm

[root@mississauga files]# yum install oracle-database-preinstall-23c-1.0-0.5.el8.x86_64.rpm

Last metadata expiration check: 0:03:51 ago on Sat 08 Apr 2023 01:17:02 PM EDT.

Dependencies resolved.

=============================================================================================================================================================

Package Architecture Version Repository Size

=============================================================================================================================================================

Installing:

oracle-database-preinstall-23c x86_64 1.0-0.5.el8 @commandline 30 k

Installing dependencies:

compat-openssl10 x86_64 1:1.0.2o-4.el8_6 ol8_appstream 1.1 M

ksh x86_64 20120801-257.0.1.el8 ol8_appstream 929 k

libnsl x86_64 2.28-211.0.1.el8 ol8_baseos_latest 105 k

Transaction Summary

=============================================================================================================================================================

Install 4 Packages

Total size: 2.2 M

Total download size: 2.1 M

Installed size: 6.3 M

Is this ok [y/N]: y

Downloading Packages:

(1/3): libnsl-2.28-211.0.1.el8.x86_64.rpm 548 kB/s | 105 kB 00:00

(2/3): compat-openssl10-1.0.2o-4.el8_6.x86_64.rpm 4.5 MB/s | 1.1 MB 00:00

(3/3): ksh-20120801-257.0.1.el8.x86_64.rpm 3.5 MB/s | 929 kB 00:00

-------------------------------------------------------------------------------------------------------------------------------------------------------------

Total 7.9 MB/s | 2.1 MB 00:00

Oracle Linux 8 BaseOS Latest (x86_64) 3.0 MB/s | 3.1 kB 00:00

Importing GPG key 0xAD986DA3:

Userid : "Oracle OSS group (Open Source Software group) <build@oss.oracle.com>"

Fingerprint: 76FD 3DB1 3AB6 7410 B89D B10E 8256 2EA9 AD98 6DA3

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-oracle

Is this ok [y/N]: y

Key imported successfully

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Installing : ksh-20120801-257.0.1.el8.x86_64 1/4

Running scriptlet: ksh-20120801-257.0.1.el8.x86_64 1/4

Installing : compat-openssl10-1:1.0.2o-4.el8_6.x86_64 2/4

Running scriptlet: compat-openssl10-1:1.0.2o-4.el8_6.x86_64 2/4

Installing : libnsl-2.28-211.0.1.el8.x86_64 3/4

Installing : oracle-database-preinstall-23c-1.0-0.5.el8.x86_64 4/4

Running scriptlet: oracle-database-preinstall-23c-1.0-0.5.el8.x86_64 4/4

Verifying : libnsl-2.28-211.0.1.el8.x86_64 1/4

Verifying : compat-openssl10-1:1.0.2o-4.el8_6.x86_64 2/4

Verifying : ksh-20120801-257.0.1.el8.x86_64 3/4

Verifying : oracle-database-preinstall-23c-1.0-0.5.el8.x86_64 4/4

Installed:

compat-openssl10-1:1.0.2o-4.el8_6.x86_64 ksh-20120801-257.0.1.el8.x86_64 libnsl-2.28-211.0.1.el8.x86_64 oracle-database-preinstall-23c-1.0-0.5.el8.x86_64

Complete!

[root@mississauga files]#

[root@mississauga files]# dnf -y localinstall /root/Desktop/files/oracle-database-free-23c-1.0-1.el8.x86_64.rpm

Last metadata expiration check: 0:05:23 ago on Sat 08 Apr 2023 01:17:02 PM EDT.

Dependencies resolved.

=============================================================================================================================================================

Package Architecture Version Repository Size

=============================================================================================================================================================

Installing:

oracle-database-free-23c x86_64 1.0-1 @commandline 1.6 G

Transaction Summary

=============================================================================================================================================================

Install 1 Package

Total size: 1.6 G

Installed size: 5.2 G

Downloading Packages:

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Running scriptlet: oracle-database-free-23c-1.0-1.x86_64 1/1

Installing : oracle-database-free-23c-1.0-1.x86_64 1/1

Running scriptlet: oracle-database-free-23c-1.0-1.x86_64 1/1

[INFO] Executing post installation scripts...

[INFO] Oracle home installed successfully and ready to be configured.

To configure Oracle Database Free, optionally modify the parameters in '/etc/sysconfig/oracle-free-23c.conf' and then run '/etc/init.d/oracle-free-23c configure' as root.

Verifying : oracle-database-free-23c-1.0-1.x86_64 1/1

Installed:

oracle-database-free-23c-1.0-1.x86_64

Complete!

[root@mississauga files]#

[root@mississauga files]#

[root@mississauga ~]# cd /etc/init.d

[root@mississauga init.d]# ls

functions oracle-database-preinstall-23c-firstboot oracle-free-23c README

[root@mississauga init.d]# /etc/init.d/oracle-free-23c configure

Specify a password to be used for database accounts. Oracle recommends that the password entered should be at least 8 characters in length, contain at least 1 uppercase character, 1 lower case character and 1 digit [0-9]. Note that the same password will be used for SYS, SYSTEM and PDBADMIN accounts:

Confirm the password:

Configuring Oracle Listener.

Listener configuration succeeded.

Configuring Oracle Database FREE.

Enter SYS user password: **********

Enter SYSTEM user password:

*******

Enter PDBADMIN User Password:

***********

Prepare for db operation

7% complete

Copying database files

29% complete

Creating and starting Oracle instance

30% complete

33% complete

36% complete

39% complete

43% complete

Completing Database Creation

47% complete

49% complete

50% complete

Creating Pluggable Databases

54% complete

71% complete

Executing Post Configuration Actions

93% complete

Running Custom Scripts

100% complete

Database creation complete. For details check the logfiles at:

/opt/oracle/cfgtoollogs/dbca/FREE.

Database Information:

Global Database Name:FREE

System Identifier(SID):FREE

Look at the log file "/opt/oracle/cfgtoollogs/dbca/FREE/FREE.log" for further details.

Connect to Oracle Database using one of the connect strings:

Pluggable database: mississauga.candomain/FREEPDB1

Multitenant container database: mississauga.candomain

[oracle@mississauga ~]$ sqlplus / as sysdba

SQL*Plus: Release 23.0.0.0.0 - Developer-Release on Sat Apr 8 13:57:09 2023

Version 23.2.0.0.0

Copyright (c) 1982, 2023, Oracle. All rights reserved.

Connected to:

Oracle Database 23c Free, Release 23.0.0.0.0 - Developer-Release

Version 23.2.0.0.0

SQL>

Done with the installation. Though there are many additions in Oracle 23c, I would like to start with a quick one: inserting multiple rows with a single INSERT command. If you use DBMSs such as MySQL or SQL Server, the syntax for inserting multiple rows into a table with a single statement is quite straightforward. It's now available in Oracle databases too! 🙂

[oracle@mississauga ~]$ sqlplus / as sysdba

SQL*Plus: Release 23.0.0.0.0 - Developer-Release on Sat Apr 8 13:57:09 2023

Version 23.2.0.0.0

Copyright (c) 1982, 2023, Oracle. All rights reserved.

Connected to:

Oracle Database 23c Free, Release 23.0.0.0.0 - Developer-Release

Version 23.2.0.0.0

SQL> select name, open_mode from v$database;

NAME OPEN_MODE

--------- --------------------

FREE READ WRITE

SQL>

SQL> create table albumdetails (album_code number(10), albumrack_number number(10), albumreleaseyear number(20), albumtype varchar2(70));

Table created.

SQL>

SQL> insert into albumdetails (album_code, albumrack_number, albumreleaseyear, albumtype) values

('100','20','1999','Vinyl'),

('101','18','2008','cassattee'),

('102','01','1992','Vinyl'),

('103','05','1988','LPRecord'),

('104','05','2018','Vinyl');

5 rows created.
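For comparison, before 23c the usual way to load several rows in one statement was INSERT ALL with a dummy SELECT from DUAL. The sketch below loads the same five rows that way; it uses numeric literals for the NUMBER columns instead of relying on the implicit string-to-number conversion used above. Run it against an empty albumdetails table, otherwise the rows will be duplicated.

```sql
-- Pre-23c equivalent of the multi-row VALUES clause shown above.
INSERT ALL
  INTO albumdetails (album_code, albumrack_number, albumreleaseyear, albumtype)
       VALUES (100, 20, 1999, 'Vinyl')
  INTO albumdetails (album_code, albumrack_number, albumreleaseyear, albumtype)
       VALUES (101, 18, 2008, 'cassattee')
  INTO albumdetails (album_code, albumrack_number, albumreleaseyear, albumtype)
       VALUES (102, 1, 1992, 'Vinyl')
  INTO albumdetails (album_code, albumrack_number, albumreleaseyear, albumtype)
       VALUES (103, 5, 1988, 'LPRecord')
  INTO albumdetails (album_code, albumrack_number, albumreleaseyear, albumtype)
       VALUES (104, 5, 2018, 'Vinyl')
SELECT * FROM dual;
```

The 23c syntax is shorter mainly because the column list is written once instead of once per row.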

Next, I will be posting about each of the new features and the experiments that I try on 23c.

Hope It Helped!

Prashant Dixit

Tagged: Database, newfeatures, oracle

Posted by FatDBA on March 31, 2023

Recently I was talking to someone who uses OCI. She asked whether she could generate an AWR report on one of her databases, as she was not sure whether they have the Oracle Diagnostics & Tuning Pack license.

As they are running on the 19c Oracle EE Extreme Performance bundle, she has licenses not only for the Diagnostics & Tuning Packs, ADG, RAC and In-Memory, but also for the other options that come with Oracle EE High Performance (image below).

By the way, "Oracle EE Extreme Performance" does not boost your database's performance by giving you more CPUs or memory; rather, it includes options that help you achieve high performance.

There is a great post by Sinan (from database-heartbeat) about the same subject.
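If you are ever unsure what a database is configured to allow, a quick sanity check is sketched below. Note the assumption: these queries only show how the instance is configured and which features have been used; they do not prove license entitlement, which still comes from your OCI service edition or contract.

```sql
-- Which management packs the instance is configured to allow
-- (for example DIAGNOSTIC+TUNING, DIAGNOSTIC, or NONE).
SELECT name, value
FROM   v$parameter
WHERE  name = 'control_management_pack_access';

-- Whether AWR-related features have been detected as used on this database.
SELECT name, detected_usages, currently_used, last_usage_date
FROM   dba_feature_usage_statistics
WHERE  name LIKE '%AWR%'
ORDER  BY name;
```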

Hope It Helped!

Prashant Dixit

Tagged: oracle

Posted by FatDBA on February 16, 2023

Hi Guys,

Recently someone asked me about the Oracle debug trace event 10027, which we use in a deadlock scenario, i.e. ORA-00060. Though the deadlock itself creates a trace file in the DIAG directory, the 10027 trace event gives you better control over the amount and type of diagnostic information generated in response to the deadlock. By default, the trace file for a deadlock (ORA-00060) contains cached cursors, a deadlock graph, process state info, the current SQL statements of the sessions involved, and session wait history.

Event 10027 may be used to augment the trace information with a system state dump or a call stack in an attempt to find the root cause of the deadlocks. The minimum amount of trace information is written at level 1; at this level the trace contains little more than the deadlock graph and the current SQL statements of the sessions involved.

In today's post I will simulate a deadlock scenario on one of my test boxes and generate the 10027 trace at level 2 to get more information. Level 2 gives you cached cursors, process state info, session wait history for all sessions and the system state, which is not possible at level 1. I am not going to use level 4 here, as I don't want to complicate this scenario.

I am going to create two tables, where TableB is a child table of TableA, plus some supporting objects to simulate the deadlock case.

The idea is that whenever an insert/update/delete happens on TableB, we need to sum the amount (amt) and then update it in the TableA.total_amt column.

[oracle@oracleontario ~]$ sqlplus dixdroid/oracle90

SQL*Plus: Release 19.0.0.0.0 - Production on Thu Feb 16 03:58:12 2023

Version 19.15.0.0.0

Copyright (c) 1982, 2022, Oracle. All rights reserved.

Last Successful login time: Thu Feb 16 2023 03:04:03 -05:00

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.15.0.0.0

SQL> create table tableA (pk_id number primary key, total_amt number);

Table created.

SQL> create table tableB (pk_id number primary key, fk_id number references tableA(pk_id) not null, amt number);

Table created.

SQL>

SQL> CREATE OR REPLACE PACKAGE global_pkg

IS

fk_id tableA.pk_id%TYPE;

END global_pkg;

/

Package created.

SQL>

SQL> CREATE OR REPLACE TRIGGER tableB_ROW_TRG

BEFORE INSERT OR UPDATE OR DELETE

ON tableB

FOR EACH ROW

BEGIN

IF INSERTING OR UPDATING

THEN

global_pkg.fk_id := :new.fk_id;

ELSE

global_pkg.fk_id := :old.fk_id;

END IF;

END tableB_ROW_TRG;

/

Trigger created.

SQL>

SQL>

SQL>

SQL> CREATE OR REPLACE TRIGGER tableB_ST_trg

AFTER INSERT OR UPDATE OR DELETE

ON tableB

BEGIN

IF UPDATING OR INSERTING

THEN

UPDATE tableA

SET total_amt =

(SELECT SUM (amt)

FROM tableB

WHERE fk_id = global_pkg.fk_id)

WHERE pk_id = global_pkg.fk_id;

ELSE

UPDATE tableA

SET total_amt =

(SELECT SUM (amt)

FROM tableB

WHERE fk_id = global_pkg.fk_id)

WHERE pk_id = global_pkg.fk_id;

END IF;

END tableB_ST_trg;

/

Trigger created.

SQL>

SQL>

SQL> insert into tableA values (1, 0);

1 row created.

SQL> insert into tableA values (2, 0);

1 row created.

SQL> insert into tableB values (123, 1, 100);

1 row created.

SQL> insert into tableB values (456,1, 200);

1 row created.

SQL> insert into tableB values (789, 1, 100);

1 row created.

SQL> insert into tableB values (1011, 2, 50);

1 row created.

SQL> insert into tableB values (1213,2, 150);

1 row created.

SQL> insert into tableB values (1415, 2, 50);

1 row created.

SQL> commit;

Commit complete.

SQL>

Let's query the table and check the totals. Next, I will delete an entry from tableB without committing, and at the same time check the locks, in particular the TM lock on tableA.

SQL>

SQL> select * from tableA;

PK_ID TOTAL_AMT

---------- ----------

1 400

2 250

SQL> delete tableB where pk_id = 1415;

1 row deleted.

SQL> SELECT sid,

(SELECT username

FROM v$session s

WHERE s.sid = v$lock.sid)

uname,

TYPE,

id1,

id2,

(SELECT object_name

FROM user_objects

WHERE object_id = v$lock.id1)

nm

FROM v$lock

WHERE sid IN (SELECT sid

FROM v$session

WHERE username IN (USER));

SID UNAME TY ID1 ID2 NM

---------- ------------------------------ -- ---------- ---------- ------------------------------

440 DIXDROID AE 134 0

440 DIXDROID TX 589853 3078

440 DIXDROID TM 82284 0 TABLEB

440 DIXDROID TM 82282 0 TABLEA

I will now connect from another session (session 2) and delete from tableB. That will induce a locking scenario in the database, and session 2 will go into a hung/waiting state.

---- from session 2:

SQL>

SQL>

SQL> delete tableB where pk_id = 1213;

.....

.........

............. <HUNG > <HUNG > <HUNG > <HUNG > <HUNG >

Let's see more stats on the blocking session.

SQL>

SQL> SELECT (SELECT username

FROM v$session

WHERE sid = a.sid)

blocker,

a.sid,

' is blocking ',

(SELECT username

FROM v$session

WHERE sid = b.sid)

blockee,

b.sid

FROM v$lock a, v$lock b

WHERE a.block = 1 AND b.request > 0 AND a.id1 = b.id1 AND a.id2 = b.id2;

BLOCKER SID 'ISBLOCKING' BLOCKEE SID

-------------------- ---------- ------------- ------------------------------ ----------

DIXDROID 440 is blocking DIXDROID 427
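As an alternative to the self-join on v$lock, the same blocker/blockee relationship can usually be read straight from v$session. This is a sketch rather than the query used in this post; it relies only on standard v$session columns.

```sql
-- Sessions that are waiting on another session, with their direct
-- and final blockers and the time spent waiting so far.
SELECT sid,
       serial#,
       username,
       event,
       blocking_session,
       final_blocking_session,
       seconds_in_wait
FROM   v$session
WHERE  blocking_session IS NOT NULL;
```

In the scenario above this should return SID 427 with BLOCKING_SESSION 440.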

And as expected, SID 440 is now blocking SID 427. Now let's go back to the original session (session 1) and try to delete from tableB again. This will snipe session 2, which is still in a hung/wait state, and throw a deadlock error (ORA-00060). Though we have already collected blocking information from v$lock and v$session, to get more clarity about the blocking sessions I will set the 10027 trace event at level 2.

This will increase our chances of finding the root cause of the deadlock. If the setting needs to persist across instance restarts, use the initialization parameter EVENT,

i.e. EVENT="10027 trace name context forever, level 2"; otherwise use the ALTER SYSTEM version of it.
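The two variants can be sketched as follows. One caveat that is my own note rather than something from this post: setting EVENT in the spfile replaces any events already stored there, so check the existing value first.

```sql
-- Check whether any events are already set in the parameter file.
SELECT name, value FROM v$parameter WHERE name = 'event';

-- Persistent: stored in the spfile, takes effect after the next restart.
ALTER SYSTEM SET EVENT = '10027 trace name context forever, level 2' SCOPE = SPFILE;

-- Dynamic: active on the running instance, lost at the next restart.
ALTER SYSTEM SET EVENTS '10027 trace name context forever, level 2';

-- Switch the dynamic event off again once the deadlock has been captured.
ALTER SYSTEM SET EVENTS '10027 trace name context off';
```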

------------------------------------------------------------

-- Execute below statement in session1

------------------------------------------------------------

delete tableB where pk_id = 1213;

---------------------------------------------------------------------------------------

-- Oracle is throwing deadlock error as below in session2

---------------------------------------------------------------------------------------

SQL>

SQL> ALTER SYSTEM SET EVENTS '10027 trace name context forever, level 2';

System altered.

SQL> delete tableB where pk_id = 1213;

delete tableB where pk_id = 1213

*

ERROR at line 1:

ORA-00060: deadlock detected while waiting for resource

ORA-06512: at "DIXDROID.TABLEB_ST_TRG", line 11

ORA-04088: error during execution of trigger 'DIXDROID.TABLEB_ST_TRG'

-- same captured in alert log too.

2023-02-16T04:02:57.648156-05:00

Errors in file /u01/app/oracle/diag/rdbms/dixitdb/dixitdb/trace/dixitdb_ora_29389.trc:

2023-02-16T04:03:00.019794-05:00

ORA-00060: Deadlock detected. See Note 60.1 at My Oracle Support for Troubleshooting ORA-60 Errors. More info in file /u01/app/oracle/diag/rdbms/dixitdb/dixitdb/trace/dixitdb_ora_29389.trc.

And we're done: we were able to simulate the deadlock in the database. Now let's dig into the trace file generated by the deadlock, along with the information flushed by the 10027 trace event. It has all the crucial information associated with the deadlock.

2023-02-16 04:13:34.115*:ksq.c@13192:ksqdld_hdr_dump():

DEADLOCK DETECTED ( ORA-00060 )

See Note 60.1 at My Oracle Support for Troubleshooting ORA-60 Errors

[Transaction Deadlock]

Deadlock graph:

------------Blocker(s)----------- ------------Waiter(s)------------

Resource Name process session holds waits serial process session holds waits serial

TX-00080009-00000C62-00000000-00000000 45 427 X 13193 24 440 X 22765

TX-0004001A-00000C1E-00000000-00000000 24 440 X 22765 45 427 X 13193

----- Information for waiting sessions -----

Session 427:

sid: 427 ser: 13193 audsid: 3390369 user: 115/DIXDROID

flags: (0x41) USR/- flags2: (0x40009) -/-/INC

flags_idl: (0x1) status: BSY/-/-/- kill: -/-/-/-

pid: 45 O/S info: user: oracle, term: UNKNOWN, ospid: 30174

image: oracle@oracleontario.ontadomain (TNS V1-V3)

client details:

O/S info: user: oracle, term: pts/1, ospid: 30173

machine: oracleontario.ontadomain program: sqlplus@oracleontario.ontadomain (TNS V1-V3)

application name: SQL*Plus, hash value=3669949024

current SQL:

UPDATE TABLEA SET TOTAL_AMT = (SELECT SUM (AMT) FROM TABLEB WHERE FK_ID = :B1 ) WHERE PK_ID = :B1

Session 440:

sid: 440 ser: 22765 audsid: 3380369 user: 115/DIXDROID

flags: (0x41) USR/- flags2: (0x40009) -/-/INC

flags_idl: (0x1) status: BSY/-/-/- kill: -/-/-/-

pid: 24 O/S info: user: oracle, term: UNKNOWN, ospid: 29847

image: oracle@oracleontario.ontadomain (TNS V1-V3)

client details:

O/S info: user: oracle, term: pts/2, ospid: 29846

machine: oracleontario.ontadomain program: sqlplus@oracleontario.ontadomain (TNS V1-V3)

application name: SQL*Plus, hash value=3669949024

current SQL:

delete tableB where pk_id = 1213

.....

.......

----- Current SQL Statement for this session (sql_id=duw5q5rpd5xvs) -----

UPDATE TABLEA SET TOTAL_AMT = (SELECT SUM (AMT) FROM TABLEB WHERE FK_ID = :B1 ) WHERE PK_ID = :B1

----- PL/SQL Call Stack -----

object line object

handle number name

0x8ed34c98 11 DIXDROID.TABLEB_ST_TRG

.......

.........

----- VKTM Time Drifts Circular Buffer -----

session 427: DID 0001-002D-000000D9 session 440: DID 0001-0018-0000001C

session 440: DID 0001-0018-0000001C session 427: DID 0001-002D-000000D9

Rows waited on:

Session 427: obj - rowid = 0001416A - AAAUFqAAHAAAMgvAAB

(dictionary objn - 82282, file - 7, block - 51247, slot - 1)

Session 440: obj - rowid = 0001416C - AAAUFsAAHAAAMg/AAE

(dictionary objn - 82284, file - 7, block - 51263, slot - 4)

.....

.......

Current Wait Stack:

0: waiting for 'enq: TX - row lock contention'

name|mode=0x54580006, usn<<16 | slot=0x4001a, sequence=0xc1e

wait_id=69 seq_num=70 snap_id=1

wait times: snap=18.173838 sec, exc=18.173838 sec, total=18.173838 sec

wait times: max=infinite, heur=18.173838 sec

wait counts: calls=6 os=6

in_wait=1 iflags=0x15a0

There is at least one session blocking this session.

Dumping 1 direct blocker(s):

inst: 1, sid: 440, ser: 22765

Dumping final blocker:

inst: 1, sid: 440, ser: 22765

There are 1 sessions blocked by this session.

Dumping one waiter:

inst: 1, sid: 440, ser: 22765

wait event: 'enq: TX - row lock contention'

p1: 'name|mode'=0x54580006

p2: 'usn<<16 | slot'=0x80009

p3: 'sequence'=0xc62

row_wait_obj#: 82284, block#: 51263, row#: 4, file# 7

min_blocked_time: 0 secs, waiter_cache_ver: 33942

Wait State:

fixed_waits=0 flags=0x22 boundary=(nil)/-1

Session Wait History:

elapsed time of 0.004677 sec since current wait

........

............

24: USER ospid 29847 sid 440 ser 22765, waiting for 'enq: TX - row lock contention'

Cmd: DELETE

Blocked by inst: 1, sid: 427, ser: 13193

Final Blocker inst: 1, sid: 427, ser: 13193

.....

.............

45: USER ospid 30174 sid 427 ser 13193, waiting for 'enq: TX - row lock contention'

Cmd: UPDATE

Blocked by inst: 1, sid: 440, ser: 22765

Final Blocker inst: 1, sid: 440, ser: 22765

.........

.............

The history is displayed in reverse chronological order.

sample interval: 1 sec, max history 120 sec

---------------------------------------------------

[19 samples, 04:13:16 - 04:13:34]

waited for 'enq: TX - row lock contention', seq_num: 70

p1: 'name|mode'=0x54580006

p2: 'usn<<16 | slot'=0x4001a

p3: 'sequence'=0xc1e

[14 samples, 04:13:01 - 04:13:15]

idle wait at each sample

[session created at: 04:13:01]

---------------------------------------------------

Sampled Session History Summary:

longest_non_idle_wait: 'enq: TX - row lock contention'

[19 samples, 04:13:16 - 04:13:34]

---------------------------------------------------

.........

..........

Virtual Thread:

kgskvt: 0x9b0e7f10, sess: 0x9ccdd4b8, pdb: 0, sid: 427 ser: 13193

vc: (nil), proc: 0x9db42028, idx: 427

consumer group cur: OTHER_GROUPS (pdb 0) (upd? 0)

mapped: DEFAULT_CONSUMER_GROUP, orig: (pdb 0)

vt_state: 0x2, vt_flags: 0xE030, blkrun: 0, numa: 1

inwait: 1, wait event: 307, posted_run: 0

lastmodrngcnt: 0, lastmodrngcnt_loc: '(null)'

lastmodrblcnt: 0, lastmodrblcnt_loc: '(null)'

location where insched last set: kgskbwt

location where insched last cleared: kgskbwt

location where inwait last set: kgskbwt

location where inwait last cleared: NULL

is_assigned: 1, in_scheduler: 0, insched: 0

vt_active: 0 (pending: 1)

vt_pq_active: 0, dop: 0, pq_servers (cur: 0 cg: 0)

ps_allocs: 0, pxstmts (act: 0, done: 0 cg: 0)

used quanta (usecs):

stmt: 57272, accum: 0, mapped: 0, tot: 57272

exec start consumed time lapse: 164377 usec

exec start elapsed time lapse: 18179246 usec

idle time: 0 ms, active time: 57272 (cg: 57272) usec

last updnumps: 0 usec, active time (pq: 0 ps: 0) ms

cpu yields: stmt: 0, accum: 0, mapped: 0, tot: 0

cpu waits: stmt: 0, accum: 0, mapped: 0, tot: 0

cpu wait time (usec): stmt: 0, accum: 0, mapped: 0, tot: 0

ASL queued time outs: 0, time: 0 (cur 0, cg 0)

PQQ queued time outs: 0, time: 0 (cur 0, cg 0)

Queue timeout violation: 0

calls aborted: 0, num est exec limit hit: 0

KTU Session Commit Cache Dump for IDLs:

KTU Session Commit Cache Dump for Non-IDLs:

----------------------------------------

........

.............
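To cross-check the wait parameters in the trace against the deadlock graph, here is a minimal Python sketch that decodes them. The function names are my own (hypothetical), and the two trailing zero fields of the resource name are simply copied from the trace above. The hex values come straight from the "Current Wait Stack" and "Rows waited on" sections.

```python
from typing import Tuple


def decode_enqueue_p1(p1: int) -> Tuple[str, int]:
    """Split the 'name|mode' wait parameter into (lock type, requested mode)."""
    # The two high-order bytes are the ASCII lock name, the low 16 bits the mode.
    lock_type = chr((p1 >> 24) & 0xFF) + chr((p1 >> 16) & 0xFF)
    return lock_type, p1 & 0xFFFF


def decode_tx_p2(p2: int) -> Tuple[int, int]:
    """Split 'usn<<16 | slot' into (undo segment number, transaction slot)."""
    return p2 >> 16, p2 & 0xFFFF


def tx_resource_name(p2: int, p3: int) -> str:
    """Rebuild the resource name in the layout used by this trace's deadlock graph."""
    # The two trailing zero fields are copied from the trace above as-is.
    return f"TX-{p2:08X}-{p3:08X}-00000000-00000000"


if __name__ == "__main__":
    # Session 427's wait: p1, p2 and p3 from the "Current Wait Stack" section.
    print(decode_enqueue_p1(0x54580006))
    print(decode_tx_p2(0x4001A), 0xC1E)
    print(tx_resource_name(0x4001A, 0xC1E))
    # Hex object number from "Rows waited on" for session 427.
    print(int("0001416A", 16))
```

For session 427 this gives lock type TX in mode 6 (exclusive), undo segment 4, slot 26 and sequence 3102, and the rebuilt name TX-0004001A-00000C1E-00000000-00000000 is the same resource the deadlock graph shows as held by session 440. The hex object number 0001416A converts to 82282, which matches the "dictionary objn" line.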

Hope It Helped!

Prashant Dixit

Posted in Uncategorized | Tagged: oracle, performance, troubleshooting, Tuning

Posted by FatDBA on January 30, 2023

I’m excited to announce that I’ve authored my first blog post on Oracle’s multi-cloud observability and management platform.

My post “Real Application Testing for Capture and Replay in a PDB, a great addition in 19c.” recently got published by Oracle Corporation on their blogging platform 🙂 🙂 🙂 🙂

Hope It Helped!

Prashant Dixit

Posted in Advanced | Tagged: oracle, performance, troubleshooting

Posted by FatDBA on January 4, 2023

CHA GUI (CHAG) is a graphical user interface for Cluster Health Advisor (CHA). It was earlier internal to Oracle teams, but it is now available to customers. It is a standalone, interactive, real-time capable front end to the classic CHA utility. Oracle 12.2 is the first version supported by CHAG. You only need a RAC license; no additional license is required to use the CHAG tool.

CHAG communicates directly with the Grid Infrastructure Management Repository (GIMR) over a JDBC connection, and it depends on GIMR because that is where it fetches its data from. If you don’t have GIMR installed, for example on 19c where GIMR is optional, you can still run CHAG in local mode, but without the GIMR management repository you lose the ability to go back in time through historical data.

Installation is quite simple: download and unzip the software on one of your cluster machines. I recommend not dumping it inside your ORACLE HOME; keep it in a separate location. CHAG requires X11 or XHost and Java, as it uses Java Swing to open the GUI. CHAG can operate in several modes.

For offline mode, you can generate the “mdb” file to analyze using the command below. Depending on the time model, you will get n mdb files for the period.

chactl export repository -format mdb -start <timestamp> -end <timestamp>
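If you export the repository regularly, a small wrapper can build that command for you. Below is a minimal Python sketch; the helper name `build_chactl_export` is hypothetical, and the timestamp format is an assumption on my part, so check the `chactl` help output on your version before relying on it. It only builds the argument list and does not run anything.

```python
import shlex
from datetime import datetime
from typing import List


def build_chactl_export(start: datetime, end: datetime) -> List[str]:
    """Build the argument list for the 'chactl export repository' command."""
    if end <= start:
        raise ValueError("end must be later than start")
    # Assumption: timestamps are passed as 'YYYY-MM-DD HH:MM:SS'.
    ts_format = "%Y-%m-%d %H:%M:%S"
    return [
        "chactl", "export", "repository",
        "-format", "mdb",
        "-start", start.strftime(ts_format),
        "-end", end.strftime(ts_format),
    ]


if __name__ == "__main__":
    # Hypothetical four-hour window, used only for illustration.
    args = build_chactl_export(
        datetime(2023, 1, 3, 22, 0, 0),
        datetime(2023, 1, 4, 2, 0, 0),
    )
    # shlex.join quotes the timestamps so the line can be pasted into a shell.
    print(shlex.join(args))
```

Keeping the arguments as a list means the same value can be handed to `subprocess.run` on a cluster node without worrying about shell quoting.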

As for usage, CHAG is invoked using the ‘chag’ script available in the bin directory of the CHA home. CHAG is designed primarily for cluster or database experts. Usage is simple and straightforward: move the pointer/slider to choose a particular timeframe and see the problems, their causes and the corrective actions. You can use it both in real time and offline. For real time you have to be on one of the cluster nodes; for offline you can generate the MDB file (CHA data file) and run it anywhere on a client machine, with no need for an Oracle home, as only Java is required.

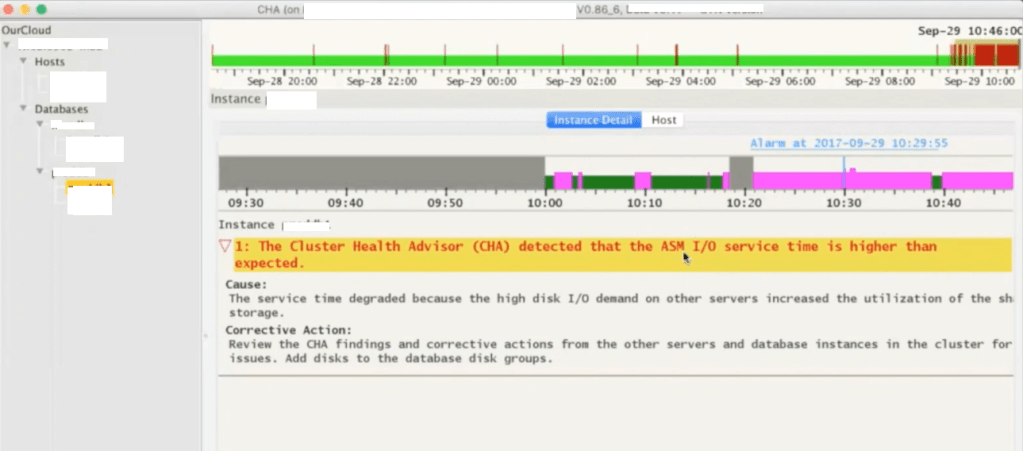

Below is the CHAG look and feel; it’s running on a 2-node RAC cluster where we have two databases installed. Here you see a few color codes, where red means there were problems during that interval.

Next is how it explains a particular problem caught for the time slot. It gives you the cause and the corrective action. For example, in the screenshot below it has detected that the ASM IO service time is higher than expected, which points to the underlying IO subsystem used by the ASM disks.

You can use SHIFT key combinations to get wait-event-specific details for the selected time period.

You can use a few other SHIFT key combinations to present the same data in the form of line graphs.

Here are a few more examples of problems detected by CHAG. This time it was reporting slow redo log writes, which is expected because ASM IO is slow too, meaning the entire IO subsystem is impacted.

I highly recommend that all readers go through Doc ID 2340062.1 on Metalink for more details on the Cluster Health Advisor Graphical User Interface (CHAG).

Hope It Helped!

Prashant Dixit

Posted in Advanced, troubleshooting | Tagged: Database, performance, RAC, troubleshooting